
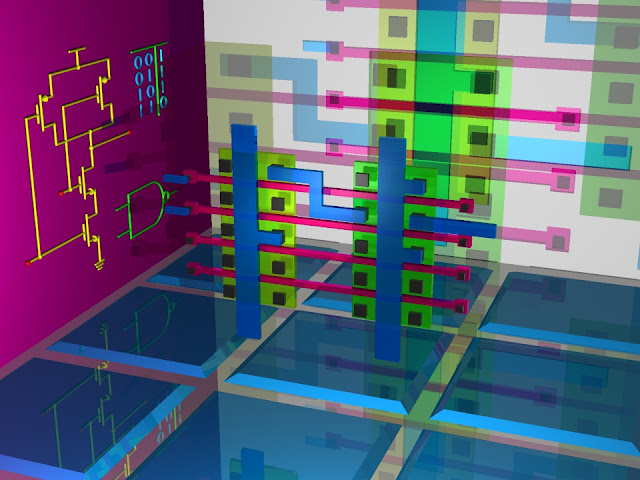

| Deus Ex Machina – Example Mandelbox fractal image I created. |

I’ve previously posted some images of Mandelbox fractals, so this time I’ll write more about them and provide a video I made of various flights through the Mandelbox.

The Mandelbox is a folding fractal, generated by doing box folds and sphere folds. It was discovered by Tom Lowe (Tglad or T’glad on various forums). The folds are actually rather simple but, surprisingly, produce very interesting results. The basic iterative algorithm is:

if (point.x > fold_limit) point.x = fold_value - point.x

else if (point.x < -fold_limit) point.x = -fold_value - point.x

do those two lines for y and z components.

length = point.x*point.x + point.y*point.y + point.z*point.z

if (length < min_radius*min_radius) multiply point by fixed_radius*fixed_radius / (min_radius*min_radius)

else if (length < fixed_radius*fixed_radius) multiply point by fixed_radius*fixed_radius / length

multiply point by mandelbox_scale and add position (or constant) to get a new value of point

Typically, fold_limit is 1, fold_value is 2, min_radius is 0.5, and fixed_radius is 1. The mandelbox_scale value can be thought of as a specification of the type of Mandelbox desired. A nice value for that is -1.5 (but it can be positive as well).

There’s a little more to it than that, but just as with Mandelbrot sets and Julia sets, the Mandelbox starts with a very simple iterative function. For those who are curious, the fold_limit parts are the box fold, and the radius parts are the sphere fold.
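For illustration, here is a minimal Python sketch of one iteration of the algorithm above, using the typical parameter values. It is not the raytracer's actual code: the function names are made up for this example, and the `escapes` helper (with its `max_iter` and `bailout` values) is an assumption added only to show how the step might be iterated.

```python
def box_fold(v, fold_limit=1.0, fold_value=2.0):
    """Box fold for one component: reflect it if it lies outside the box."""
    if v > fold_limit:
        return fold_value - v
    if v < -fold_limit:
        return -fold_value - v
    return v


def sphere_fold(p, min_radius=0.5, fixed_radius=1.0):
    """Sphere fold: scale the point based on its squared distance from the origin."""
    r2 = p[0] * p[0] + p[1] * p[1] + p[2] * p[2]  # squared length
    if r2 < min_radius * min_radius:
        factor = (fixed_radius * fixed_radius) / (min_radius * min_radius)
    elif r2 < fixed_radius * fixed_radius:
        factor = (fixed_radius * fixed_radius) / r2
    else:
        factor = 1.0  # points outside fixed_radius are left alone
    return tuple(c * factor for c in p)


def mandelbox_step(p, c, scale=-1.5):
    """One iteration: box fold, sphere fold, then scale and add the constant c."""
    p = tuple(box_fold(v) for v in p)
    p = sphere_fold(p)
    return tuple(scale * v + k for v, k in zip(p, c))


def escapes(c, scale=-1.5, max_iter=50, bailout=100.0):
    """Assumed escape test: does the orbit of c leave radius `bailout`?"""
    p = c
    for _ in range(max_iter):
        p = mandelbox_step(p, c, scale)
        if p[0] * p[0] + p[1] * p[1] + p[2] * p[2] > bailout * bailout:
            return True
    return False
```

With these defaults, the origin stays put under iteration, while a point far outside the box such as `(10.0, 0.0, 0.0)` escapes after a handful of steps.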

One of the parts that is left deals with

what’s called ray marching. Since these types of fractals don’t have a

simple parametric equation that can be easily solved without the need

for iterations, etc., one must progress along the ray and ask “are we

there yet?”. To help speed up this progression, an estimate of a safe

distance to jump is calculated (using a distance estimator). Once the

jump is made, the “are we there yet?” question is asked again. This goes

on until either we get close enough or it’s clear we will never get

there. The “close enough” part involves deciding ahead of time how

precise we want the image to be. Since fractals have infinite

precision/definition (ignoring the limitations of the computer, of

course), there’s no choice but to at some point say “we’re close

enough”. This basically means we’re rendering an isosurface of the

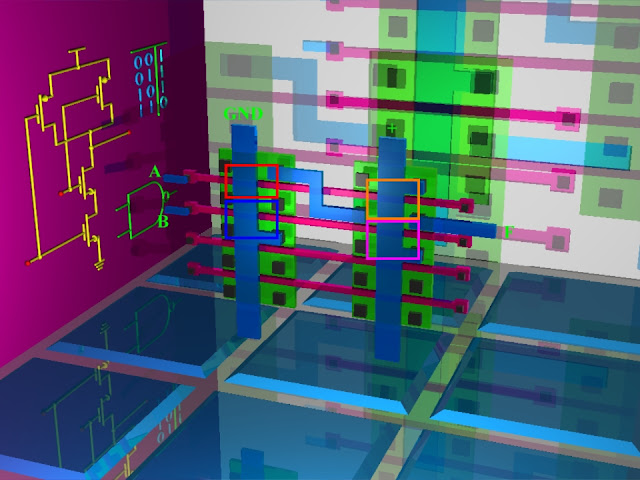

fractal. To see what I mean, refer to both my “Deus Ex Machina” image

and my “Kludge Mechanism” image. Kludge Mechanism uses a less precise

setting and therefore has less features.

|

| Kludge Mechanism – Example Mandelbox fractal image I created |
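The “are we there yet?” loop can be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: the post does not give the Mandelbox distance estimator, so a plain unit sphere stands in for it here, and the names `ray_march`, `epsilon`, `max_distance`, and `max_steps` are hypothetical. The `epsilon` parameter plays the role of the “close enough” precision setting.

```python
import math


def sphere_distance(p, radius=1.0):
    """Stand-in distance estimator: distance from p to a sphere at the origin."""
    return math.sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]) - radius


def ray_march(origin, direction, distance_estimator,
              epsilon=1e-4, max_distance=100.0, max_steps=256):
    """March along a ray (direction assumed to be unit length).

    Returns the distance t along the ray at which the surface is
    "close enough" (estimate below epsilon), or None if the ray gives up.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d = distance_estimator(p)   # estimate of a safe distance to jump
        if d < epsilon:
            return t                # we're close enough
        t += d                      # make the jump
        if t > max_distance:
            return None             # it's clear we will never get there
    return None                     # ran out of steps
```

A larger `epsilon` stops the march earlier, which is the same trade-off as the less precise setting described above: the rendered surface sits further from the true one and fine detail is lost.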

The ray marching technique (and distance estimator method) can be used to create a Mandelbulb, 4D Julia, Menger Sponge, Kaleidoscopic IFS (KIFS), etc. as well as non-fractal objects like normal boxes, spheres, cones, etc. But many of the non-fractal objects are calculated better and faster with parametric equations.
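As a rough comparison, here is how a sphere can be intersected directly, with no marching at all. This sketch assumes a sphere centred at the origin and a unit-length ray direction; the function name is hypothetical. It solves the quadratic for the ray parameter in closed form, which is why simple shapes like this are usually cheaper to handle this way.

```python
import math


def ray_sphere_intersect(origin, direction, radius=1.0):
    """Nearest non-negative t where origin + t*direction hits the sphere.

    Assumes the sphere is centred at the origin and direction is unit
    length. Returns None if the ray misses the sphere.
    """
    b = sum(o * d for o, d in zip(origin, direction))
    c = sum(o * o for o in origin) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None              # no real roots: the ray misses
    root = math.sqrt(disc)
    t = -b - root                # nearer of the two intersections
    if t < 0.0:
        t = -b + root            # ray starts inside the sphere
    return t if t >= 0.0 else None
```

One square root replaces the whole loop of distance estimates, and the answer is exact up to floating-point rounding rather than “close enough”.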

Now for the fun part (hopefully). Here’s a video I made using my raytracer. It shows various Mandelbox flights and even a section varying the mandelbox_scale from -4 to -1.5.

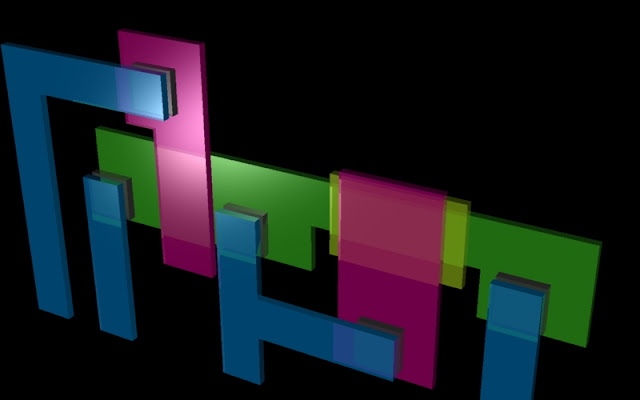

One of the flights points out an interesting by-product of an attempt to speed up the ray marching. One can specify a bound radius; anything outside of that radius doesn’t need to be run through the ray marching process. In my “Futuristic City Under Construction” flight, I accidentally set the bound radius too small, which cut out some of the Mandelbox. But in this case it was, in my opinion, an improvement because it removed some clutter.

| Futuristic City Under Construction (Small Bounding Radius) |

| Futuristic City Under Construction (Correct Bounding Radius) |
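One plausible way to implement such a bound radius is to intersect each ray with a bounding sphere first and only march between the entry and exit points. The sketch below assumes a bounding sphere centred at the origin and a unit-length ray direction; the post does not say how the raytracer actually does it, and `bound_interval` is a hypothetical name.

```python
import math


def bound_interval(origin, direction, bound_radius):
    """Range (t_near, t_far) of the ray that lies inside the bounding sphere.

    Assumes the sphere is centred at the origin and direction is unit
    length. Returns None if the ray never enters the sphere, in which
    case ray marching can be skipped entirely.
    """
    b = sum(o * d for o, d in zip(origin, direction))
    c = sum(o * o for o in origin) - bound_radius * bound_radius
    disc = b * b - c
    if disc < 0.0:
        return None                      # ray misses the bounding sphere
    root = math.sqrt(disc)
    t_near, t_far = -b - root, -b + root
    if t_far < 0.0:
        return None                      # sphere is entirely behind the ray
    return max(t_near, 0.0), t_far       # clamp if the ray starts inside


# A camera 10 units away looking straight at the origin:
print(bound_interval((0.0, 0.0, -10.0), (0.0, 0.0, 1.0), 6.0))  # (4.0, 16.0)
print(bound_interval((0.0, 0.0, -10.0), (0.0, 0.0, 1.0), 2.0))  # (8.0, 12.0)
```

With the smaller radius the march only covers the range from 8 to 12 along the ray, so any part of the fractal outside that range is never tested. That is the kind of clipping seen in the small-radius version of the flight.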

I’ve also created another video showing just Deus Ex Machina but with higher resolution, more detail, and more frames. Even though it’s 1080p, I recommend using the 720p setting.

I also made another video showing just Futuristic City Under Construction, but with much better camera movements, a longer flight, more detail, more frames, and a 16:9 aspect ratio.

To better view these videos on my YouTube channel (with full control) go to: http://www.youtube.com/MrMcSoftware/videos

A lot more can be said on the subject of Mandelbox fractals, and I have a lot more images I created, but this will do for now.