
| A raytraced virtual art gallery I created using a raytracer I wrote. It includes drawings of girls I drew. |

I've often thought that if, for some reason, I ever ended up being a substitute teacher, I would offer the students a choice. Do the boring assignment their normal teacher wanted them to do - and let's face it, nobody feels like doing that when the teacher's not around - or learn how to do what makes Pixar films, for example, possible. Let's assume they chose the latter. Then I would show them how to write a raytracer. Of course, since, let's assume, this is not a computer programming class (in which case I would be preaching to the choir anyway), not everyone would know how to program a computer or know the language I would be using. So, most of the stuff would have to be "spoon fed" to them.

One might wonder, "Well then, what's the point?" The point is that raytracing not only covers the subjects of computer programming, but also math, physics, art, language, and depending on how the raytracer is used, chemistry, biology, and many other fields. So, hopefully, it would be a way of showing how all those subjects are actually useful in the real world. And once the students see how easy it was, admittedly because of the spoon feeding mentioned earlier, and see the nice results, who knows what interests might be sparked. Perhaps, unrealistic wishful thinking, but hey, no one wanted to do the assigned work anyway. I bet the normal teacher and the administration wouldn't like me, but who knows. Plus, I've read that the "new way" of teaching in this era of Google, smartphones, and spell check is "how to think" rather than just facts and dates.

So, you might be thinking, "Ok, I'm sold, so what is raytracing?" Well, imagine looking out your window, scanning every inch of your window as you look out at what's outside. You see a car with shiny chrome with reflections of nearby objects. And someone left a glass of water on the car. You can see what's on the other side of the glass, through the glass and water - it looks distorted or bent. You see shadows. You see some parts of the car getting more sun than other parts. In a sense, you've just raytraced. What a raytracer does is send mathematical rays of light out from the viewer's eye (or camera) through a screen (in the mathematical sense), searching for the nearest object (in the virtual scene) in each ray's path. When a ray hits an object that is reflective and/or refractive, the raytracer uses the law of reflection and Snell's Law to determine how the ray is to be altered. These laws are two of those things in physics class you thought you would never see again. Once the ray is altered in the appropriate manner, it once again searches for the nearest object in its path, until there's nothing left in its path or a limit on how much to search is reached. That covers computer programming (the raytracer has to be coded in some computer language), math (the calculations), and physics (the laws of light rays).
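To make those steps a little more concrete, here is a minimal Python sketch of the three core operations: finding the nearest object in a ray's path, bouncing the ray with the law of reflection, and bending it with Snell's Law. It is not the code of the raytracer described in this article. The scene is assumed to contain only spheres, vectors are plain tuples, directions are assumed to be unit length, and every function name (`nearest_hit`, `reflect`, `refract`, and so on) is made up for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scale(v, s):
    return tuple(x * s for x in v)

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def normalize(v):
    return scale(v, 1.0 / math.sqrt(dot(v, v)))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit on a sphere, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c              # discriminant of the ray/sphere quadratic
    if disc < 0.0:
        return None               # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:              # ignore hits behind (or right at) the origin
            return t
    return None

def nearest_hit(origin, direction, spheres):
    """Return (distance, sphere) for the closest sphere in the ray's path, or None."""
    best = None
    for sphere in spheres:
        t = hit_sphere(origin, direction, sphere["center"], sphere["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best

def reflect(d, n):
    """Law of reflection: mirror unit direction d about unit surface normal n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))

def refract(d, n, n1, n2):
    """Snell's Law in vector form, going from refractive index n1 into n2.

    n must point against d. Returns None on total internal reflection.
    """
    eta = n1 / n2
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))
```

A full raytracer would call `nearest_hit` for every pixel's ray, then call it again with the reflected or refracted direction, stopping when nothing is hit or a depth limit is reached. Shading, shadows, and shapes other than spheres are left out of this sketch.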

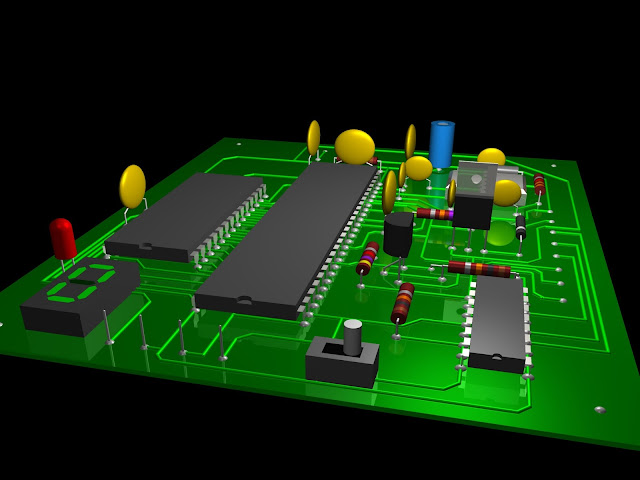

Now for the other subjects. The obvious connection to art is color. In computer monitor terms, for example, every color is really a combination of the right amounts of red, green, and blue. In computer printer terms, every color is a combination of yellow, cyan, and magenta. This difference has to do with whether the medium is additive or subtractive. For raytracing, though, red, green, and blue are used. There are other color models which make calculating colors easier. For example, in many of my raytraced scenes that appear on my websites, I used the Hue, Saturation, and Value color model to add a gradual progression of colors through the spectrum. I also like the look of metal, so many of my scenes have a metallic look. This would involve art (color of certain metals) but also physics again (light characteristics of metals). Art is also applicable to the building of complex-looking objects using a combination of simple objects. For example, in its simplest form, a car can be made from a box and four circles. Not a very good-looking car, but everyone could imagine it's a car. Put in a few more boxes, some tori (doughnuts), some cylinders, and probably some spheres (balls), and it starts to look more like a car. Language comes into play here. You have to have some way of specifying the scene. Box would be a noun. White, for example, would be an adjective. Other scripting constructs could be thought of as verbs. I realize this is stretching it a bit to say that this would help with grammar, but it does emphasize the importance of adjectives and nouns.
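As a small illustration of the color ideas above, the following Python sketch steps the hue through the spectrum in the Hue, Saturation, and Value model and converts each step to red, green, and blue. It also shows the simple idealized relationship between the additive (monitor) and subtractive (printer) primaries. It relies on `colorsys.hsv_to_rgb` from the Python standard library. The helper names `spectrum` and `rgb_to_cmy` are made up for this example, and none of this is taken from the raytracer discussed in the article.

```python
import colorsys

def spectrum(steps, saturation=1.0, value=1.0):
    """Return `steps` RGB colors (0-255 per channel) evenly spaced in hue."""
    colors = []
    for i in range(steps):
        hue = i / steps  # 0.0 is red; hue wraps back to red at 1.0
        r, g, b = colorsys.hsv_to_rgb(hue, saturation, value)
        colors.append((round(r * 255), round(g * 255), round(b * 255)))
    return colors

def rgb_to_cmy(r, g, b):
    """Idealized additive-to-subtractive conversion for channels in 0.0-1.0.

    Real printers need more than this (black ink, color profiles), so treat
    it only as a picture of the additive/subtractive relationship.
    """
    return (1.0 - r, 1.0 - g, 1.0 - b)

# Six steps give red, yellow, green, cyan, blue, magenta.
for color in spectrum(6):
    print(color)
```

Changing only the hue while holding saturation and value fixed is what makes a smooth progression through the spectrum easy to calculate in this model; doing the same directly in red, green, and blue takes more bookkeeping.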

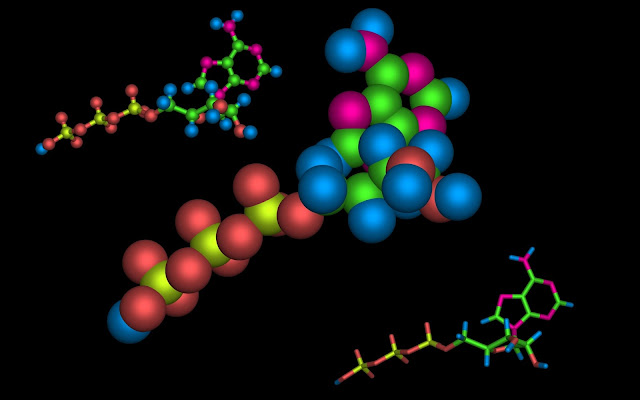

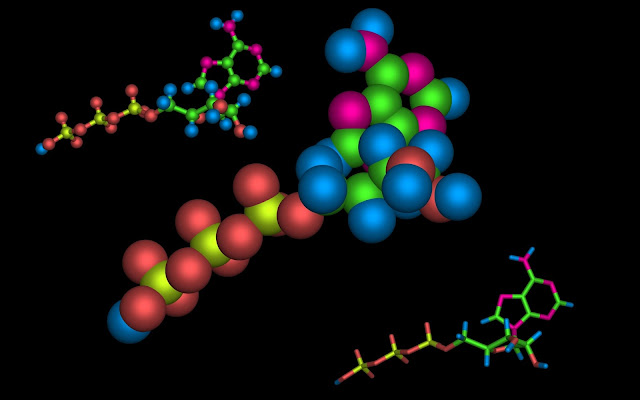

Chemistry comes into play as one of the uses of raytracing, in the form of modeling complex (or simple) molecules. As anyone who's seen a Pixar film would know, the characters in the film move (usually) in a human manner. The human skeletal system is studied to make the characters walk in a more believable manner. Thus, biology comes into play. The method of raytracing has even been applied to sound instead of light, to model how sound propagates through a hallway, window, door, etc. and bounces off walls.

Raytracers can range from the very simple to the very complex. I've even seen a raytracer's code printed on a business card. That shows how simple a raytracer can be to write (of course, any raytracer that short would be very limited and not very useful). I wrote my raytracer on my Commodore Amiga computer, but I would often run it on a Sun workstation (UNIX) because my Amiga wasn't fast enough for complex scenes. I eventually ported my raytracer to MS-DOS, Windows, and Linux / X-Windows. It's come a long way throughout the years. It didn't take years to write, of course; I did many other things between my excursions with my raytracer.

In case you're sold on raytracers but not on writing your own, there are plenty of commercial and free raytracers, POV-Ray being an excellent free one. Well, happy raytracing!


| A raytraced set of colored glass Moebius rings. Follow the color coding to see the twisting of the big ring. |


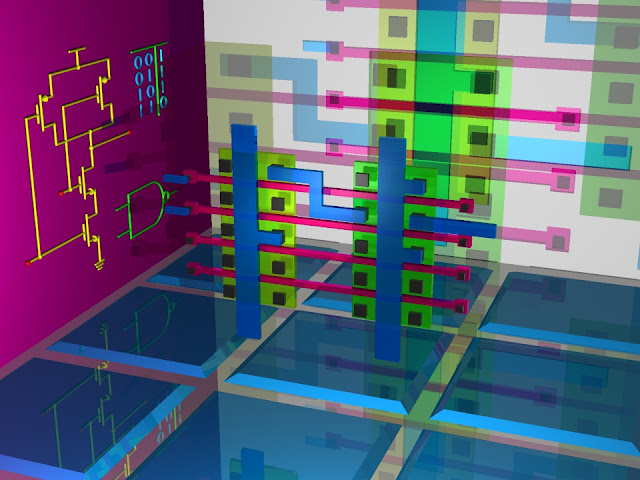

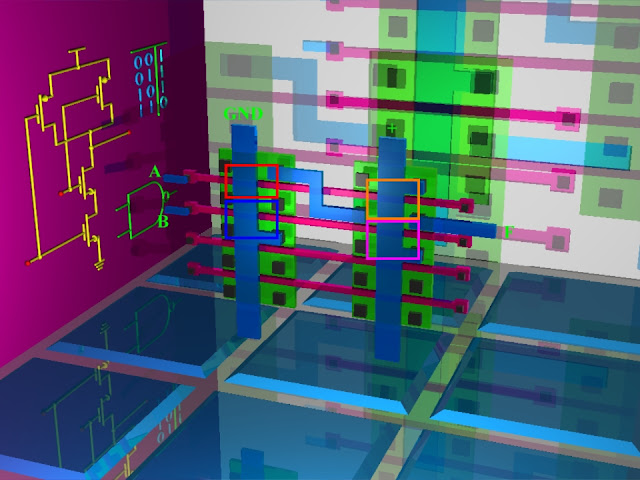

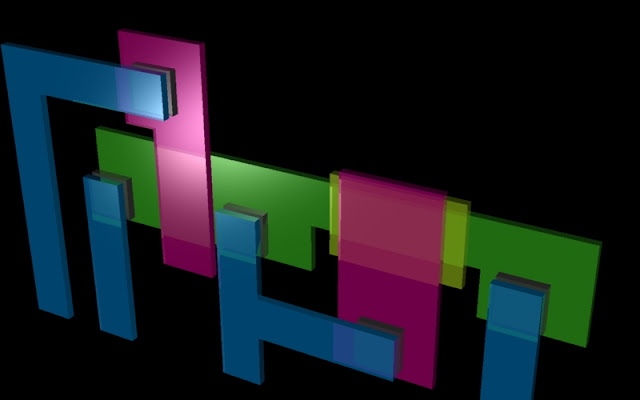

| A raytraced colored glass integrated circuit mask layout of a NAND logic circuit made from a gate array. |


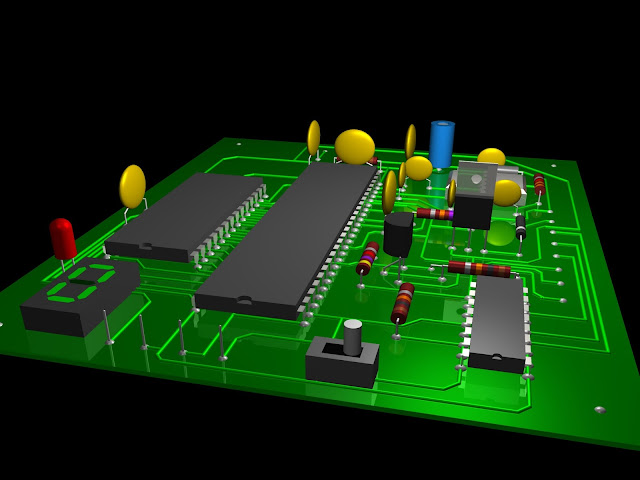

| A raytraced printed circuit board (PCB) |


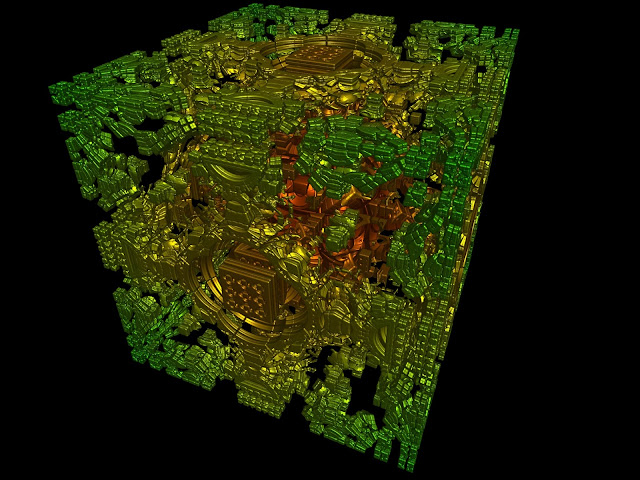

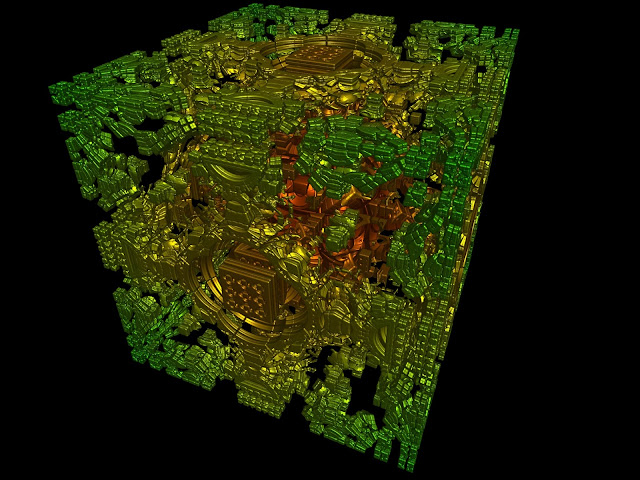

| A raytraced Mandelbox fractal |


| A raytraced Mandelbox fractal. |


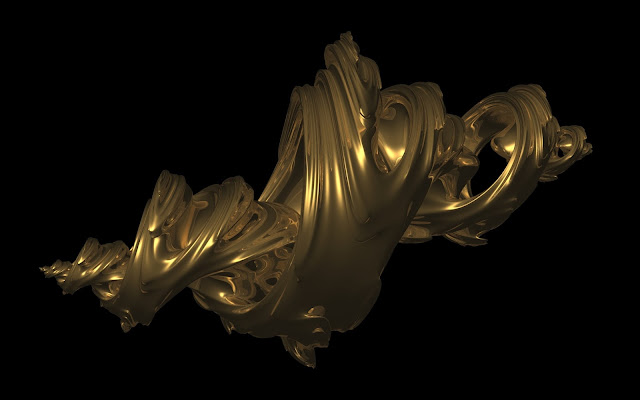

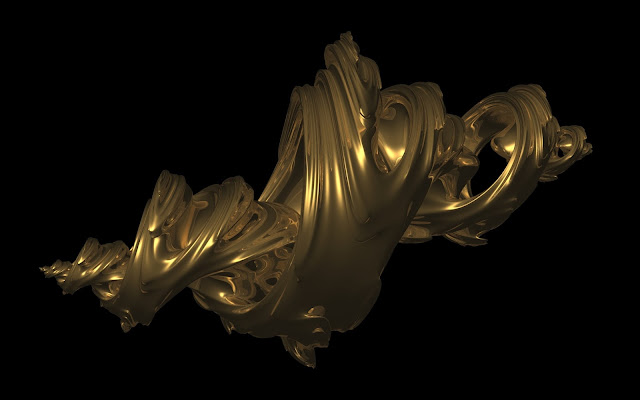

| A raytraced gold 4d quaternion Julia fractal |


| Different ways of showing an ATP molecule using raytracing |